To calculate the sum of a column's values in PySpark, use the sum() function from the pyspark.sql.functions module. You can pass it to either agg() or select() to total a single column or several columns at once. Let's see how to calculate:

- Sum of a single column in PySpark using agg() and sum()

- Sum of a single column in PySpark using select() and sum()

- Sum of multiple columns in PySpark using agg() and sum()

- Sum of multiple columns in PySpark using select() and sum()

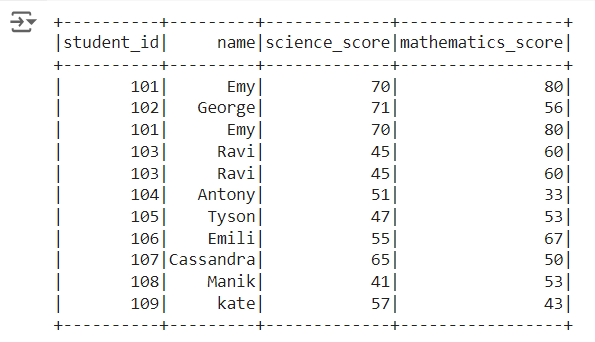

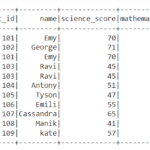

We will use the dataframe named df.

Sum of a single column in PySpark: Method 1 using the agg() function

To calculate the sum of a single column, pass sum() to agg() as shown below.

from pyspark.sql import functions as F

# calculate the sum of the column named 'science_score'

df.agg(F.sum('science_score')).collect()[0][0]

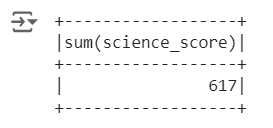

So the resulting sum of the “science_score” column is returned as a single Python value, because collect()[0][0] takes the first field of the first row.

Sum of a single column in PySpark: Method 2 using the select() function

To calculate the sum of a single column, you can also pass sum() to select() as shown below.

from pyspark.sql.functions import sum  # note: this shadows Python's built-in sum()

# calculate the sum of the column named 'science_score'

df.select(sum("science_score")).show()

So the resulting sum of the “science_score” column is printed by show() as a one-row table.

Sum of multiple columns in PySpark: Method 1 using sum() and agg()

To calculate the sum of multiple columns in PySpark, you can use the agg() function, which lets you apply aggregate functions such as sum() to more than one column at a time.

from pyspark.sql.functions import sum  # note: this shadows Python's built-in sum()

# calculate the sums of the columns named 'science_score' and 'mathematics_score'

df.agg(sum("science_score"), sum("mathematics_score")).show()

agg(sum("science_score"), sum("mathematics_score")): this applies the sum() function to both columns in one call.

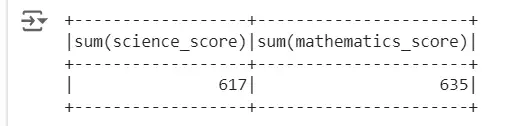

So the resulting sums of the “science_score” and “mathematics_score” columns are printed by show() as a single row with one column per sum.

Sum of multiple columns in PySpark: Method 2 using sum() and select()

An alternative way to calculate the sum of multiple columns in PySpark is the select() function. If you only need totals over the whole DataFrame, with no aggregation over groups, you can pass several sum() expressions to select() to total more than one column at a time.

from pyspark.sql.functions import sum  # note: this shadows Python's built-in sum()

# calculate the sums of the columns named 'science_score' and 'mathematics_score'

df.select(sum("science_score"), sum("mathematics_score")).show()

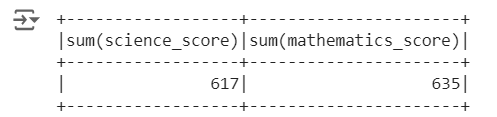

So the resulting sums of the “science_score” and “mathematics_score” columns are printed by show() as a single row with one column per sum.
