In order to subset or filter data with conditions in pyspark we will be using filter() function. filter() function subsets or filters the data with single or multiple conditions in pyspark. Let’s get clarity with an example.

- Subset or filter data with single condition

- Subset or filter data with multiple conditions (multiple or condition in pyspark)

- Subset or filter data with multiple conditions (multiple and condition in pyspark)

- Subset or filter data with conditions using sql functions

- Filter using Regular expression in pyspark

- Filter starts with and ends with keyword in pyspark

- Filter with null and non null values in pyspark

- Filter with LIKE% and in operator in pyspark

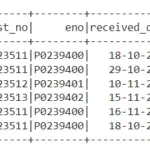

We will be using dataframe df.

Subset or filter data with single condition in pyspark

Subset or filter data with single condition in pyspark can be done using filter() function with conditions inside the filter function.

## subset with single condition df.filter(df.mathematics_score > 50).show()

The above filter function chosen mathematics_score greater than 50. So the dataframe is subsetted or filtered with mathematics_score greater than 50

Subset or filter data with multiple conditions in pyspark (multiple and)

Subset or filter data with multiple conditions can be done using filter() function, by passing the conditions inside the filter functions, here we have used and operators

## subset with multiple conditions with and conditions

df.filter('mathematics_score > 50 and science_score > 50').show()

The above filter function chosen mathematics_score greater than 50 and science_score greater than 50. So the result will be

Subset or filter data with multiple conditions in pyspark (multiple or)

Subset or filter data with multiple conditions can be done using filter function() with conditions inside the filter functions with either or / and operator

## subset with multiple conditions with or conditions

df.filter('mathematics_score > 50 or science_score > 50').show()

The above filter function chosen mathematics_score greater than 50 or science_score greater than 50. So the result will be

Subset or filter data with multiple conditions in pyspark (multiple and spark sql)

Subset or filter data with multiple conditions can be done using filter() function, by passing the conditions inside the filter functions, here we have used & operators

## subset with multiple condition using sql.functions

import pyspark.sql.functions as f

df.filter((f.col('mathematics_score') > 50) & (f.col('science_score') > 50)).show()

The above filter function chosen mathematics_score greater than 50 and science_score greater than 50. So the result will be

Subset or filter data with multiple conditions in pyspark (multiple or spark sql)

Subset or filter data with multiple conditions in pyspark can be done using filter function() and col() function along with conditions inside the filter functions with either or / and operator

## subset with multiple condition using sql.functions

import pyspark.sql.functions as f

df.filter((f.col('mathematics_score') > 60)| (f.col('science_score') > 60)).show()

The above filter function chosen mathematics_score greater than 60 or science_score greater than 60. So the result will be

Filter using Regex with column name like in pyspark:

colRegex() function with regular expression inside is used to select the column with regular expression.

## Filter using Regex with column name like

df.select(df.colRegex("`(mathe)+?.+`")).show()

the above code selects column with column name like mathe%

Filter column name contains in pyspark :

Returns rows where strings of a column contain a provided substring. In our example, filtering by rows which contain the substring “an” would be a good way to get all rows that contains “an”.

## Filter column name contains

df.filter(df.name.contains('an')).show()

So the resultant dataframe will be

Filter row with string starts with in pyspark :

Returns rows where strings of a row start with a provided substring. In our example, filtering by rows which starts with the substring “Em” is shown.

## Filter row with string starts with "Em"

df.filter(df.name.startswith('Em')).show()

So the resultant dataframe will be

Filter row with string ends with in pyspark :

Returns rows where strings of a row end with a provided substring. In our example, filtering by rows which ends with the substring “i” is shown.

## Filter row with string ends with "i"

df.filter(df.name.endswith('i')).show()

So the resultant dataframe will be

- filter(df.name.isNull()): Returns rows where values in a provided column are null.

- filter(df.name.isNotNull()):Returns rows where values in a provided column are not null.

- filter(df.name.like(‘Em%’)).show() : Performs a SQL-like query containing the LIKE clause.

- filter(df.name.rlike(‘[A-Z]*vi$’)).show() : Performs a regexp filter.

- filter(df.name.isin(‘Ravi’, ‘Manik’)).show() : Looks for rows where the string value of a column matches any of the provided strings exactly.

Other Related Topics:

- Drop rows in pyspark – drop rows with condition

- Distinct value of a column in pyspark

- Distinct value of dataframe in pyspark – drop duplicates

- Count of Missing (NaN,Na) and null values in Pyspark

- Mean, Variance and standard deviation of column in Pyspark

- Maximum or Minimum value of column in Pyspark

- Raised to power of column in pyspark – square, cube , square root and cube root in pyspark

- Drop column in pyspark – drop single & multiple columns

- Frequency table or cross table in pyspark – 2 way cross table

- Groupby functions in pyspark (Aggregate functions) – Groupby count, Groupby sum, Groupby mean, Groupby min and Groupby max

- Descriptive statistics or Summary Statistics of dataframe in pyspark

- Rearrange or reorder column in pyspark

- cumulative sum of column and group in pyspark

- Calculate Percentage and cumulative percentage of column in pyspark

- Select column in Pyspark (Select single & Multiple columns)

- Get data type of column in Pyspark (single & Multiple columns)

- Get List of columns and its data type in Pyspark