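In PySpark, the error TypeError: Column is not iterable typically occurs when you call a Python built-in function (such as min(), max() or sum()) directly on a PySpark Column object. A Column describes a computation that Spark evaluates later; it is not a container of values, so Python cannot loop over it. Unlike in Pandas, you can't apply these built-ins directly to a DataFrame's column in PySpark. Use the equivalent functions from pyspark.sql.functions instead.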
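To see why the built-ins fail, here is a pure-Python sketch that needs no Spark installation. FakeColumn is a hypothetical stand-in, not a PySpark class: it only roughly mimics how PySpark's Column refuses iteration by raising a TypeError from its __iter__ method. When called with a single argument, min(), max() and sum() all try to iterate over that argument, so each one hits the error.

```python
class FakeColumn:
    """Hypothetical stand-in for pyspark.sql.Column (illustration only).

    It represents an expression rather than a list of values,
    so it refuses to be iterated, much like PySpark's Column.
    """

    def __init__(self, name):
        self.name = name

    def __iter__(self):
        # PySpark's Column raises a TypeError here in a similar way.
        raise TypeError("Column is not iterable")


def try_builtin(func, column):
    """Call a Python built-in (min, max, sum) on a value and report the outcome."""
    try:
        func(column)
    except TypeError as exc:
        return f"{func.__name__}: {exc}"
    return f"{func.__name__}: no error"


science_score = FakeColumn("science_score")
for builtin_func in (min, max, sum):
    print(try_builtin(builtin_func, science_score))
# prints:
# min: Column is not iterable
# max: Column is not iterable
# sum: Column is not iterable

# An ordinary list is iterable, so the built-in works as usual.
print(try_builtin(min, [3, 1, 2]))  # min: no error
```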

We will look at how to fix three variants of this error:

- TypeError: Column is not iterable when using the min() function
- TypeError: Column is not iterable when using the max() function
- TypeError: Column is not iterable when using the sum() function

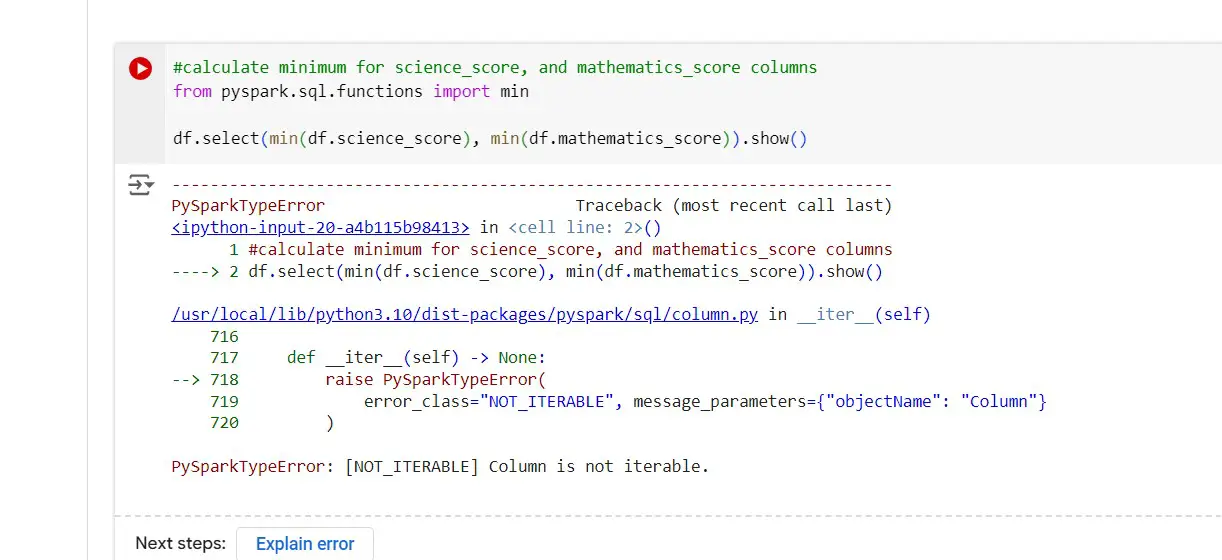

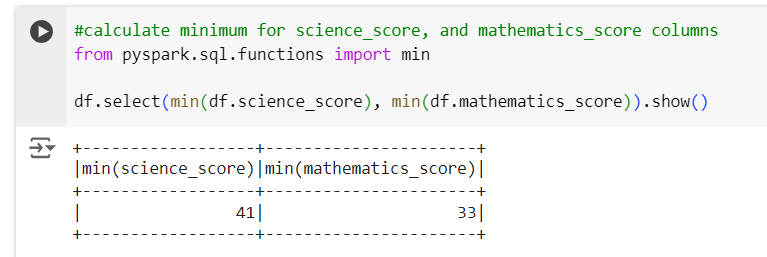

PySpark TypeError: Column is not iterable when using the min() function

Fix for the PySpark error TypeError: Column is not iterable

Using min() from pyspark.sql.functions

To calculate the minimum of a column in PySpark, use the min() function from pyspark.sql.functions instead of Python's built-in min(). Import min from pyspark.sql.functions as shown below:

```python
# Calculate the minimum of the science_score and mathematics_score columns
# Note: this import shadows Python's built-in min() in the current module
from pyspark.sql.functions import min

df.select(min(df.science_score), min(df.mathematics_score)).show()
```

Now the TypeError is gone. Here is the output:

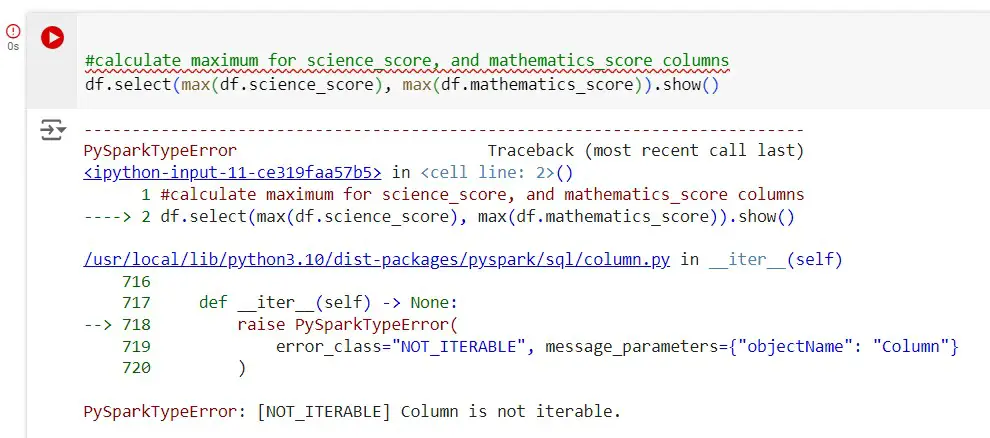

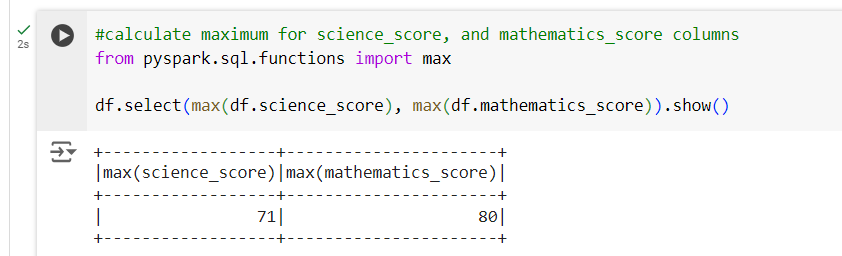

PySpark TypeError: Column is not iterable when using the max() function

Fix for the PySpark error TypeError: Column is not iterable

Using max() from pyspark.sql.functions

To calculate the maximum of a column in PySpark, use the max() function from pyspark.sql.functions instead of Python's built-in max(). Import max from pyspark.sql.functions as shown below:

```python
# Calculate the maximum of the science_score and mathematics_score columns
# Note: this import shadows Python's built-in max() in the current module
from pyspark.sql.functions import max

df.select(max(df.science_score), max(df.mathematics_score)).show()
```

Now the TypeError is gone. Here is the output:

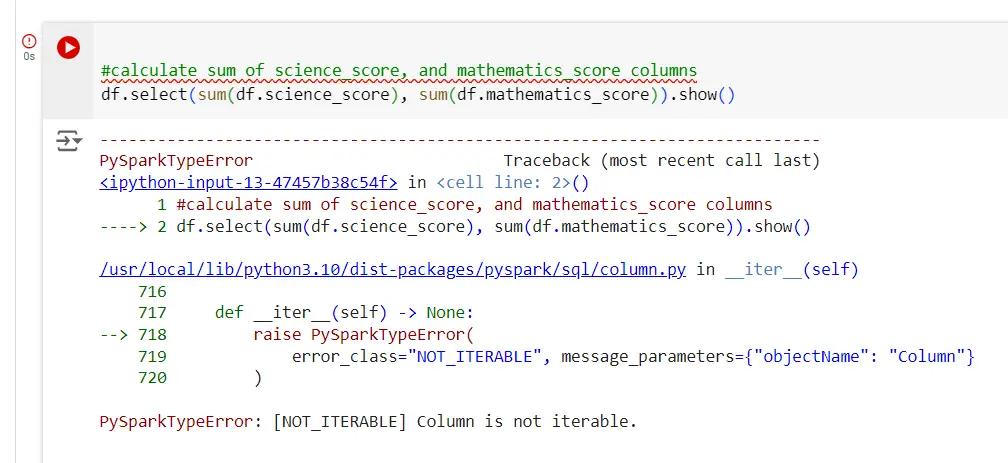

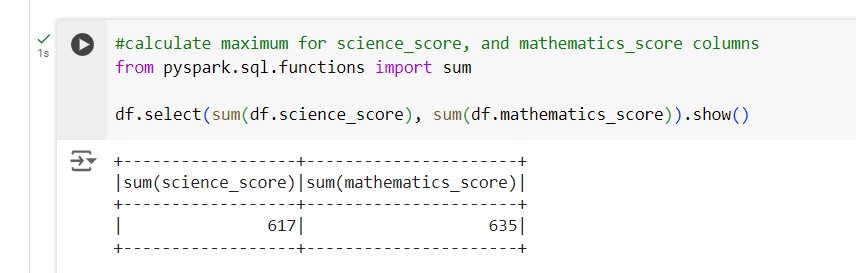

PySpark TypeError: Column is not iterable when using the sum() function

Fix for the PySpark error TypeError: Column is not iterable

Using sum() from pyspark.sql.functions

To calculate the sum of a column in PySpark, use the sum() function from pyspark.sql.functions instead of Python's built-in sum(). Import sum from pyspark.sql.functions as shown below:

```python
# Calculate the sum of the science_score and mathematics_score columns
# Note: this import shadows Python's built-in sum() in the current module
from pyspark.sql.functions import sum

df.select(sum(df.science_score), sum(df.mathematics_score)).show()
```

Now the TypeError is gone. Here is the output: