In PySpark, you can compute the absolute value of a numeric column in a DataFrame using the abs() function, which is part of the pyspark.sql.functions module. It returns the absolute value of each entry in the column. Let’s see how to

- Get the absolute value in PySpark using the abs() function.

- Extract the absolute value of a column using the abs() function.

With an example for each.

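Get Absolute value in PySpark:

The abs() function in PySpark returns the absolute value of its input, that is, the value with its sign removed.

So the absolute value of a negative number is the same number without the minus sign, and a non-negative number is returned unchanged.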

Extract Absolute value of the column in PySpark:

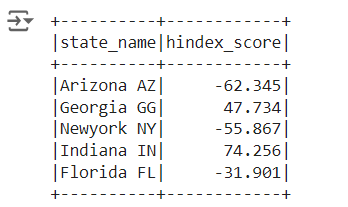

To get the absolute value of a column in PySpark, we use the abs() function and pass the column to it as an argument. Let’s see an example; the DataFrame we use is df_states.

The abs() function takes a column as its argument and returns a new column holding the absolute value of each entry in that column.

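########## Extract Absolute value of the column in pyspark

# Note: this import shadows Python's built-in abs() in the current namespace
from pyspark.sql.functions import abs

# df_states is an existing DataFrame with a numeric hindex_score column
df1 = df_states.withColumn('Absolute_Value', abs(df_states.hindex_score))

df1.show()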

Explanation:

Importing the necessary function: abs from pyspark.sql.functions, which calculates absolute values.

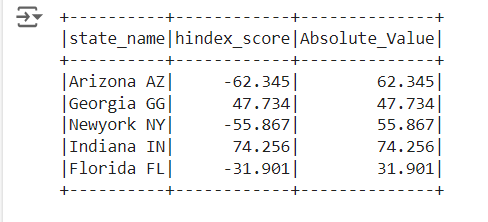

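Calculating absolute values: The withColumn method is used to add a new column “Absolute_Value”, which contains the absolute values of the “hindex_score” column. withColumn returns a new DataFrame, df1; df_states itself is left unchanged.

Displaying results: df1.show() prints the first 20 rows of the new DataFrame df1, including the Absolute_Value column.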

So the resultant Absolute_Value column holds the absolute value of each “hindex_score” entry.
